"Testing is an infinite process of comparing the invisible to the ambiguous in order to avoid the unthinkable happening to the anonymous." — James Bach

This is the first article in a series that will explore the creation of a dynamic software automation framework, in contrast to today’s static test frameworks, which fail to provide enriching variability in the testing process. We will focus on exciting new concepts such as Artificial Intelligence, model-based testing, data generators, and test patterns to build a truly state-of-the-art exploratory test framework.

There is no doubt that we are witnessing major shifts across the software development industry. Ironically, though the role of the tester in the traditional sense is diminishing, the testing discipline as a whole is moving from a peripheral activity to the heart of the software delivery process, for all roles from analysis/development to change management. The customer has moved from being a receiver of a defect-free software solution to being the defining factor of what a quality system is.

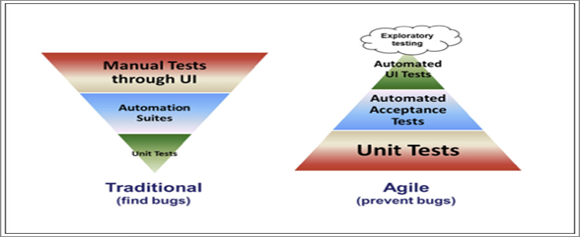

Quality is no longer introduced into the system through the resolution of defects; rather, it has become the essence around which the system is built. Nowhere is this more evident than in the rotation of the traditional V model into the Test Pyramid, which (literally) turns the concept on its head.

Traditional V Model converted to the Test Pyramid

Many articles have been written about test automation in TDD, BDD, and CI/CD, but we see a few blind spots that come naturally with a high dependence on automation for large integrated systems that are part of a larger organizational value chain. Because static automation simply repeats the same test runs, it leaves a myriad of issues unaddressed. Examples include:

Defect Mutation: This principle describes how the system becomes hardened in automation-focused areas while weakening in other areas, diminishing its overall fault tolerance.

Changing Perspective & Context Testing: Issues can be found because the different roles involved in the testing process each bring a different perspective.

Tester Variability: The argument is that manual testers will vary in their tests (intentionally or not), and through increasing trials (law of large numbers) will stumble upon more latent defects.

Limitations of Exploratory Testing: Exploratory testing may be limited in scope and duration. Once a feature has been tested, it is not revisited in an exploratory fashion. Its dependence on a manual process may also cause delays.

Crowd Testing Feasibility: While this is a great approach, it may not be feasible within a 2-week agile delivery cycle, it may expose proprietary algorithms/data, and it can reveal features to competitors before they are market-ready.

Static Test Automation: Automation is improved manually rather than evolving organically with the system, and (data-driven) lessons learnt are not easily shared across systems.
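To make the Tester Variability argument concrete, here is a toy Python simulation; it is not part of any framework described in this series. The function `apply_discount`, its planted bug, and the fixed `STATIC_SUITE` are all hypothetical. A static suite that replays the same four cases never reaches the faulty input band, while trials that vary their inputs (seeded through `random.Random` so runs are reproducible) find it more and more reliably as the number of trials grows.

```python
import random


def apply_discount(price_cents, percent):
    """Hypothetical function under test with a planted latent defect."""
    if 95 <= percent <= 99:
        return price_cents  # Bug: the discount is silently skipped in this band.
    return price_cents * (100 - percent) // 100


def expected_price(price_cents, percent):
    """Independent oracle: the correct discounted price."""
    return price_cents * (100 - percent) // 100


# A fixed regression suite that replays the same cases on every run.
STATIC_SUITE = [(1000, 0), (1000, 10), (1000, 50), (1000, 100)]


def run_static():
    """Return the static cases that fail (never any, for this suite)."""
    return [c for c in STATIC_SUITE if apply_discount(*c) != expected_price(*c)]


def run_varied(trials, seed=0):
    """Each trial picks fresh inputs, mimicking a tester who varies the test."""
    rng = random.Random(seed)
    failures = []
    for _ in range(trials):
        case = (rng.randint(1, 100_000), rng.randint(0, 100))
        if apply_discount(*case) != expected_price(*case):
            failures.append(case)
    return failures


if __name__ == "__main__":
    print("static failures:", run_static())
    for trials in (10, 100, 1000):
        print(trials, "varied trials ->", len(run_varied(trials, seed=7)), "failures")
```

Since 5 of the 101 possible percentages fall in the faulty band, roughly 5% of varied trials hit the defect, so a thousand trials all but guarantee failures, while the static suite keeps reporting none.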

What is truly needed is a dynamic framework that builds upon the lower levels (unit, integration, and functional tests), embeds the strengths of experienced testers into an exploratory test solution, and achieves true economies of scale by expanding test automation frameworks across the enterprise.

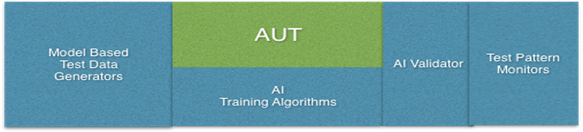

Components of the Dynamic Framework

Model-Based Test Generator: Data generators can generate large volumes of relevant, production-like data. These models can be built using test design principles (as discussed in this thesis by the founder of Qualitics, USA, Mr. Chandra Alluri: https://cs.gmu.edu/~offutt/documents/theses/chandraAlluri-thesis-final.pdf), Test Pattern Models, or by leveraging data lakes and/or existing production data.
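A minimal sketch of a model-based data generator, assuming a deliberately simple model in which every field is an integer with a valid range. `IntField`, `generate_boundary_cases`, `generate_random_cases`, and `is_valid` are hypothetical names, and boundary-value analysis stands in for whichever test design principles a real model would apply. The point is that one model drives both the generated data and the oracle that judges it.

```python
import itertools
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class IntField:
    """Hypothetical model of a numeric field with an inclusive valid range."""
    name: str
    low: int
    high: int

    def boundary_values(self):
        # Boundary-value analysis: just outside, on, and just inside each edge.
        return [self.low - 1, self.low, self.low + 1,
                self.high - 1, self.high, self.high + 1]

    def random_valid(self, rng):
        # Production-like filler value drawn uniformly from the valid range.
        return rng.randint(self.low, self.high)


def generate_boundary_cases(fields):
    """Yield every combination of boundary values across all fields."""
    for combo in itertools.product(*(f.boundary_values() for f in fields)):
        yield dict(zip((f.name for f in fields), combo))


def generate_random_cases(fields, count, seed=0):
    """Yield `count` randomized valid records; the seed keeps runs reproducible."""
    rng = random.Random(seed)
    for _ in range(count):
        yield {f.name: f.random_valid(rng) for f in fields}


def is_valid(record, fields):
    # Oracle derived from the same model: every value must lie in its range.
    return all(f.low <= record[f.name] <= f.high for f in fields)


if __name__ == "__main__":
    model = [IntField("age", 18, 65), IntField("quantity", 1, 100)]
    boundary = list(generate_boundary_cases(model))
    print(len(boundary), "boundary cases")  # 6 * 6 = 36
    print(sum(is_valid(r, model) for r in boundary), "of them valid")  # 4 * 4 = 16
    for record in generate_random_cases(model, count=3, seed=42):
        print(record, is_valid(record, model))
```

Boundary combinations grow multiplicatively with the number of fields, so a fuller generator would likely switch to pairwise selection or sampling once the model gets large.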

Artificial Intelligence: AI can be trained to build internal models from large volumes of data and then be used to scan data created by the system and identify potentially erroneous data. To date, we’ve been able to replicate this process with 82.3% accuracy. This accuracy can be further improved with larger volumes of more accurate training data.
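The 82.3% result comes from the authors' own trained model, which is not shown here. As a much simpler stand-in, the sketch below swaps in a per-field z-score detector: it learns each field's mean and standard deviation from known-good records, then flags values that deviate by more than a threshold. `ZScoreAnomalyDetector`, the field names, and the default threshold of 3.0 are assumptions for illustration only.

```python
import statistics


class ZScoreAnomalyDetector:
    """Stand-in for a trained model: learns per-field mean and standard
    deviation from known-good records and flags large deviations."""

    def __init__(self, threshold=3.0):
        self.threshold = threshold
        self.stats = {}

    def fit(self, records):
        """Learn statistics for every field in the (non-empty) training records."""
        for field in records[0]:
            values = [r[field] for r in records]
            self.stats[field] = (statistics.mean(values), statistics.pstdev(values))
        return self

    def anomalies(self, record):
        """Return the names of fields whose values look anomalous."""
        flagged = []
        for field, (mean, stdev) in self.stats.items():
            value = record[field]
            if stdev == 0:
                # No variation seen in training, so any different value is suspect.
                if value != mean:
                    flagged.append(field)
            elif abs(value - mean) / stdev > self.threshold:
                flagged.append(field)
        return flagged


if __name__ == "__main__":
    # Hypothetical known-good order data used for training.
    training = [{"order_total": 100 + i % 10, "items": 3 + i % 2} for i in range(200)]
    detector = ZScoreAnomalyDetector(threshold=3.0).fit(training)
    print(detector.anomalies({"order_total": 104, "items": 3}))   # []
    print(detector.anomalies({"order_total": 5000, "items": 3}))  # ['order_total']
```

As with any learned model, the detector is only as good as its training data: more, and more representative, known-good records tighten the learned ranges.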

Application under Test: The AUT itself must be conceptualised in a way that makes it intrinsically testable and fault tolerant. Examples include generating exceptions on data mismatches and exposing test APIs to allow integration with the testing framework (e.g. UI API log calls with browser exceptions). Another aspect is the ability to observe the system's fault tolerance and recoverability, which limits potential negative fallout and gives the delivery team a reasonable window for issue resolution.
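A minimal sketch of what "intrinsically testable and fault tolerant" might look like in code, assuming a hypothetical `OrderService` component. It raises a dedicated `DataMismatchError` when data disagrees, recovers by holding the payment for review instead of crashing, and exposes a read-only `recent_exceptions()` hook that a testing framework could poll. All of these names are illustrative, not an existing API.

```python
from collections import deque


class DataMismatchError(Exception):
    """Raised when incoming data disagrees with what the system expects."""


class OrderService:
    """Hypothetical AUT component built to be testable and fault tolerant."""

    def __init__(self, max_logged=100):
        # Bounded buffer of recent exceptions, kept for the test framework.
        self._exceptions = deque(maxlen=max_logged)

    def _validate(self, order_total, amount_paid):
        if order_total != amount_paid:
            raise DataMismatchError(
                f"paid {amount_paid} but order total is {order_total}")

    def record_payment(self, order_total, amount_paid):
        try:
            self._validate(order_total, amount_paid)
        except DataMismatchError as exc:
            # Log the exception, then degrade gracefully instead of crashing.
            self._exceptions.append(exc)
            return "held_for_review"
        return "recorded"

    # Test API: a read-only hook the testing framework can poll.
    def recent_exceptions(self):
        return [str(exc) for exc in self._exceptions]
```

Holding the payment rather than failing outright is one way to contain the fallout of a defect, while the exception log keeps the mismatch visible to automated tests and monitors.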

Test Pattern Monitors: These monitors (which will include an AI) continuously watch each application (and its data) for anomalies, exceptions, and unexpected behaviors. Many tools can be leveraged, such as Test Pattern Regexes, JMeter (for load and performance testing), Dynatrace, and Sauce Labs (for cross-platform testing and monitoring).
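A Test Pattern Regex monitor could start as simply as the sketch below, which scans log lines against a small dictionary of hypothetical patterns: exception class names, HTTP 5xx status codes in an access-log line, and responses slower than an assumed 2000 ms budget. The pattern names, log formats, and threshold are all assumptions; a production monitor would stream logs continuously rather than scan a list.

```python
import re
from collections import Counter

# Hypothetical test patterns: each name maps to a regex that signals trouble.
PATTERNS = {
    "exception": re.compile(r"\b\w+(?:Exception|Error)\b"),
    "http_5xx": re.compile(r'\bHTTP/\d\.\d" 5\d\d\b'),
    "slow_response": re.compile(r"took (\d+)ms"),
}
SLOW_MS = 2000  # Assumed latency budget in milliseconds; tune per application.


def scan(lines):
    """Match every line against every pattern; return hit counts and findings."""
    hits = Counter()
    findings = []
    for number, line in enumerate(lines, start=1):
        for name, pattern in PATTERNS.items():
            match = pattern.search(line)
            if match is None:
                continue
            if name == "slow_response" and int(match.group(1)) <= SLOW_MS:
                continue  # Within budget, so not an anomaly.
            hits[name] += 1
            findings.append((number, name, line.strip()))
    return hits, findings


if __name__ == "__main__":
    sample_log = [
        "10:00:01 INFO GET /api/orders took 120ms",
        "10:00:02 WARN GET /api/orders took 3400ms",
        "10:00:03 ERROR java.lang.NullPointerException at OrderService",
        '127.0.0.1 - - "GET /api/cart HTTP/1.1" 503 512',
    ]
    hits, findings = scan(sample_log)
    for finding in findings:
        print(finding)
```

Keeping patterns in a plain dictionary means new ones learnt on one system can be shared with others as data, which addresses the "lessons learnt" gap of static automation.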

Each of these components will be addressed in future articles with practical examples and working solutions. We will also explore a methodology for creating and delivering these solutions with minimal overhead, as value-added products of your existing delivery process. Stay tuned!

To learn how to implement a dynamic software automation framework to test your digital products, get in touch with us today.